How to Fine-Tune LLMs with LoRA and QLoRA: A Practical Guide

Dec 31, 2025 · Learn how to fine-tune large language models efficiently using LoRA and QLoRA. Complete guide with code examples, hyperparameter tuning, and production deployment …

Understanding Low-Rank Adaptation (LoRA): A Revolution in Fine-Tuning …

Jan 3, 2026 · A Blog post by Ashish Chadha on Hugging Face

Fine-Tuning using LoRA and QLoRA - GeeksforGeeks

Jun 20, 2025 · This approach allows you to efficiently fine-tune massive models on standard GPUs, combining aggressive memory savings with the parameter efficiency of LoRA and …

LoRA for Fine-Tuning LLMs explained with codes and example

Oct 31, 2023 · With every passing day, we get something new, be it a new LLM like Mistral-7B, a framework like LangChain or LlamaIndex, or fine-tuning techniques.

LoRA Fine Tuning: Revolutionizing AI Model Optimization

Jul 21, 2025 · LoRA (Low-Rank Adaptation) is a parameter-efficient fine-tuning technique designed to adapt large pre-trained language models (LLMs) such as GPT-3 or BERT to …
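To make "parameter-efficient" concrete, the arithmetic can be sketched as follows. This is a minimal illustration, not taken from any of the listed articles: it assumes a single square weight matrix of size 4096 x 4096 and a LoRA rank of 8, both chosen only for the example, and the function names are hypothetical.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Number of entries in a dense d_out x d_in weight matrix."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, r: int) -> int:
    """Trainable entries in a rank-r LoRA pair: B is d_out x r, A is r x d_in."""
    return r * (d_out + d_in)


# Assumed sizes for illustration only (not from the source snippets).
d_out, d_in, r = 4096, 4096, 8

full = full_params(d_out, d_in)
lora = lora_params(d_out, d_in, r)
fraction = lora / full

print(full)      # 16777216
print(lora)      # 65536
print(fraction)  # 0.00390625, i.e. about 0.39% of the full matrix
```

The count covers one matrix only; the saving for a whole model depends on which layers receive adapters and on the rank chosen.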

LoRA Fine-Tuning Tutorial: Reduce GPU Memory Usage by 90%

May 29, 2025 · LoRA (Low-Rank Adaptation) fine-tuning reduces memory usage by 90% while maintaining model performance. This tutorial shows you how to implement LoRA fine-tuning …

Efficient Fine-Tuning with LoRA for LLMs | Databricks Blog

Aug 30, 2023 · Explore efficient fine-tuning of large language models using Low Rank Adaptation (LoRA) for cost-effective and high-quality AI solutions.

Fine-Tuning Made Easy: A Beginner's Guide to LoRA

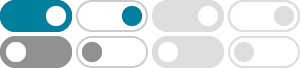

So, during fine-tuning, you only update A and B, while the original weight matrix remains unchanged. This way, you're effectively adapting the model to your specific task with minimal …
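The idea of updating only A and B while leaving the original weights untouched can be sketched in plain Python. This is a rough illustration under stated assumptions, not the code from the article above: the helper names `matvec` and `lora_forward` are hypothetical, the matrix sizes are arbitrary, and the `alpha / r` scaling and zero initialization of B follow the common LoRA convention rather than anything stated in the snippet.

```python
import random


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lora_forward(W, A, B, x, alpha, r):
    """Compute W x + (alpha / r) * B (A x); W is frozen, A and B are trainable."""
    base = matvec(W, x)                # frozen path through the original weights
    update = matvec(B, matvec(A, x))   # low-rank path: project down with A, up with B
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


random.seed(0)
d_out, d_in, r, alpha = 4, 6, 2, 4     # assumed toy sizes
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]   # frozen
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]    # trainable, r x d_in
B = [[0.0] * r for _ in range(d_out)]                                   # trainable, d_out x r, zero init
x = [1.0] * d_in

# With B at zero the adapter contributes nothing, so the output matches the base model.
y_before = lora_forward(W, A, B, x, alpha, r)

# Stand-in for a training step: only an entry of B changes, W is never modified.
B[0][0] = 1.0
y_after = lora_forward(W, A, B, x, alpha, r)
```

Because B starts at zero, the adapted layer initially behaves exactly like the original one, and every later change in the output comes from A and B alone.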

LoRA Fine-tuning & Hyperparameters Explained (in Plain English)

Nov 24, 2023 · In this article, we’ll explain how LoRA works in plain English. You will gain an understanding of how it is similar to and different from full-parameter fine-tuning, what is going on …

LoRA Fine-Tuning [PDF]

LoRA vs Partial Freezing: Computer vision models, for example, are fine-tuned by freezing all but the last 3 layers of a CNN and training on the fine-tuning dataset. In the context of LLMs: What are the …